🃏 Inference for a Single Mean

“The more I love humanity in general, the less I love man in particular. ― Fyodor Dostoyevsky, The Brothers Karamazov

…neither let us despair over how small our successes are. For however much our successes fall short of our desire, our efforts aren’t in vain when we are farther along today than yesterday.

— John Calvin

Plot Fonts and Theme

Show the Code

library(systemfonts)

library(showtext)

## Clean the slate

systemfonts::clear_local_fonts()

systemfonts::clear_registry()

##

showtext_opts(dpi = 96) # set DPI for showtext

sysfonts::font_add(

family = "Alegreya",

regular = "../../../../../../fonts/Alegreya-Regular.ttf",

bold = "../../../../../../fonts/Alegreya-Bold.ttf",

italic = "../../../../../../fonts/Alegreya-Italic.ttf",

bolditalic = "../../../../../../fonts/Alegreya-BoldItalic.ttf"

)

sysfonts::font_add(

family = "Roboto Condensed",

regular = "../../../../../../fonts/RobotoCondensed-Regular.ttf",

bold = "../../../../../../fonts/RobotoCondensed-Bold.ttf",

italic = "../../../../../../fonts/RobotoCondensed-Italic.ttf",

bolditalic = "../../../../../../fonts/RobotoCondensed-BoldItalic.ttf"

)

showtext_auto(enable = TRUE) # enable showtext

##

theme_custom <- function() {

font <- "Alegreya" # assign font family up front

theme_classic(base_size = 14, base_family = font) %+replace% # replace elements we want to change

theme(

text = element_text(family = font), # set base font family

# text elements

plot.title = element_text( # title

family = font, # set font family

size = 24, # set font size

face = "bold", # bold typeface

hjust = 0, # left align

margin = margin(t = 5, r = 0, b = 5, l = 0)

), # margin

plot.title.position = "plot",

plot.subtitle = element_text( # subtitle

family = font, # font family

size = 14, # font size

hjust = 0, # left align

margin = margin(t = 5, r = 0, b = 10, l = 0)

), # margin

plot.caption = element_text( # caption

family = font, # font family

size = 9, # font size

hjust = 1

), # right align

plot.caption.position = "plot", # right align

axis.title = element_text( # axis titles

family = "Roboto Condensed", # font family

size = 12

), # font size

axis.text = element_text( # axis text

family = "Roboto Condensed", # font family

size = 9

), # font size

axis.text.x = element_text( # margin for axis text

margin = margin(5, b = 10)

)

# since the legend often requires manual tweaking

# based on plot content, don't define it here

)

}Show the Code

```{r}

#| cache: false

#| code-fold: true

## Set the theme

theme_set(new = theme_custom())

## Use available fonts in ggplot text geoms too!

update_geom_defaults(geom = "text", new = list(

family = "Roboto Condensed",

face = "plain",

size = 3.5,

color = "#2b2b2b"

))

```

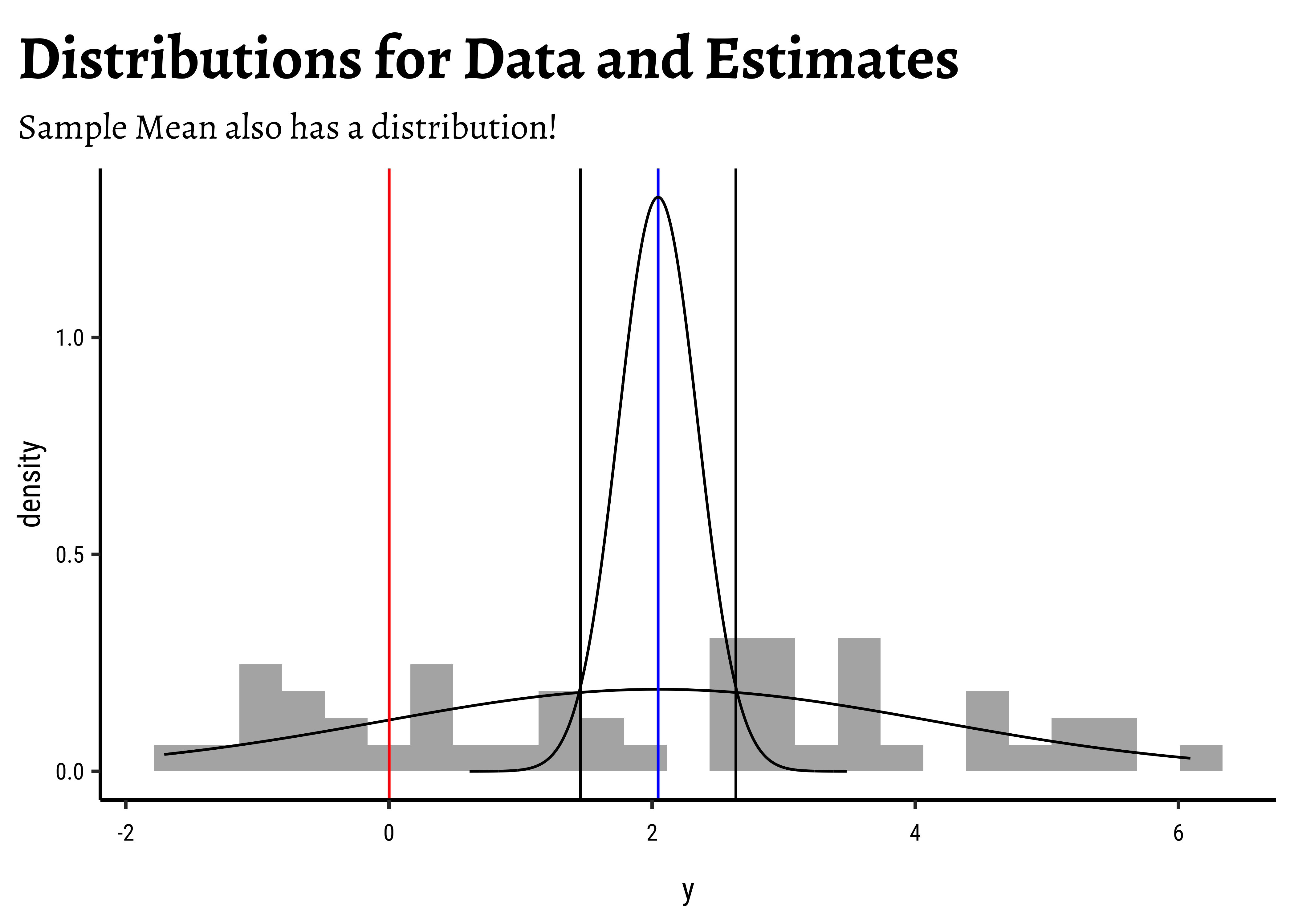

In this module, we will answer a basic Question: What is the mean

Recall that the mean is the first of our Summary Statistics. We wish to know more about the mean of the population from which we have drawn our data sample.

We will do this is in several ways, based on the assumptions we are willing to adopt about our data. First we will use a toy dataset with one “imaginary” sample, normally distributed and made up of 50 observations. Since we “know the answer” we will be able to build up some belief in the tests and procedures, which we will dig into to form our intuitions.

We will then use a real-world dataset to make inferences on the means of Quant variables therein, and decide what that could tell us.

As we will notice, the process of Statistical Inference is an attitude: ain’t nothing happenin’! We look at data that we might have received or collected ourselves, and look at it with this attitude, seemingly, of some disbelief. We state either that:

- there is really nothing happening with our research question, and that anything we see in the data is the outcome of random chance.

- the value/statistic indicated by the data is off the mark and ought to be something else.

We then calculate how slim the chances are of the given data sample showing up like that, given our belief. It is a distance measurement of sorts. If those chances are too low, then that might alter our belief. This is the attitude that lies at the heart of Hypothesis Testing.

The calculation of chances is both a logical, and a possible procedure since we are dealing with samples from a population. If many other samples give us quite different estimates, then we would discredit the one we derive from it.

Each test we perform will mechanize this attitude in different ways, based on assumptions and conveniences. (And history)

Since the CLT assumes the sample is normally-distributed, let us generate a sample that is just so:

y <dbl> | ||||

|---|---|---|---|---|

| 2.95547807 | ||||

| 2.99236565 | ||||

| 0.28083140 | ||||

| 0.34188009 | ||||

| 1.35685383 | ||||

| -0.60754080 | ||||

| -0.84297320 | ||||

| 5.48982989 | ||||

| 1.42344128 | ||||

| -0.61773144 |

# Set graph theme

theme_set(new = theme_custom())

#

mydata %>%

gf_density(~y) %>%

gf_fitdistr(dist = "dnorm") %>%

gf_labs(

title = "Densities of Original Data Variables",

subtitle = "Compared with Normal Density"

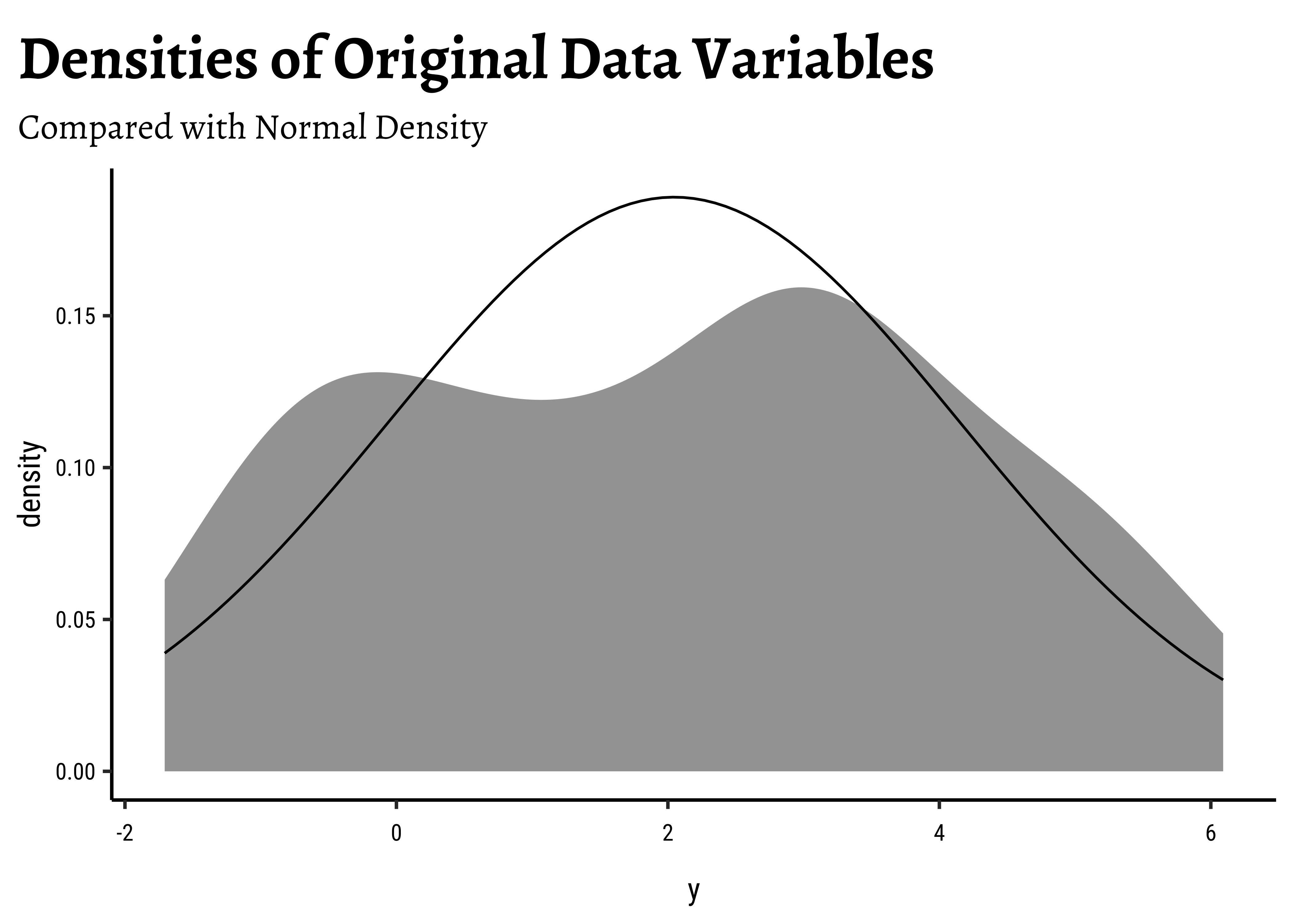

)- The variable

- It does not seem to be normally distributed…

- So assumptions are not always valid…

Research Question

Research Questions are always about the population! Here goes:

Could the mean of the population

Assumptions

The y-variable does not appear to be normally distributed. This would affect the test we can use to make inferences about the population mean.

There are formal tests for normality too. We will do them in the next case study. For now, let us proceed naively.

Inference

A. Model

We have

B. Code

# t-test

t1 <- mosaic::t_test(

y, # Name of variable

mu = 0, # belief of population mean

alternative = "two.sided"

) %>% # Check both sides

broom::tidy() # Make results presentable, and plottable!!

t1estimate <dbl> | statistic <dbl> | p.value <dbl> | parameter <dbl> | conf.low <dbl> | conf.high <dbl> | method <chr> | alternative <chr> |

|---|---|---|---|---|---|---|---|

| 2.045689 | 6.785596 | 1.425495e-08 | 49 | 1.439852 | 2.651526 | One Sample t-test | two.sided |

Recall how we calculated means, standard deviations from data (samples). If we could measure the entire population, then there would be no uncertainty in our estimates for means and sd-s. Since we are forced to sample, we can only estimate population parameters based on the sample estimates and state how much off we might be.

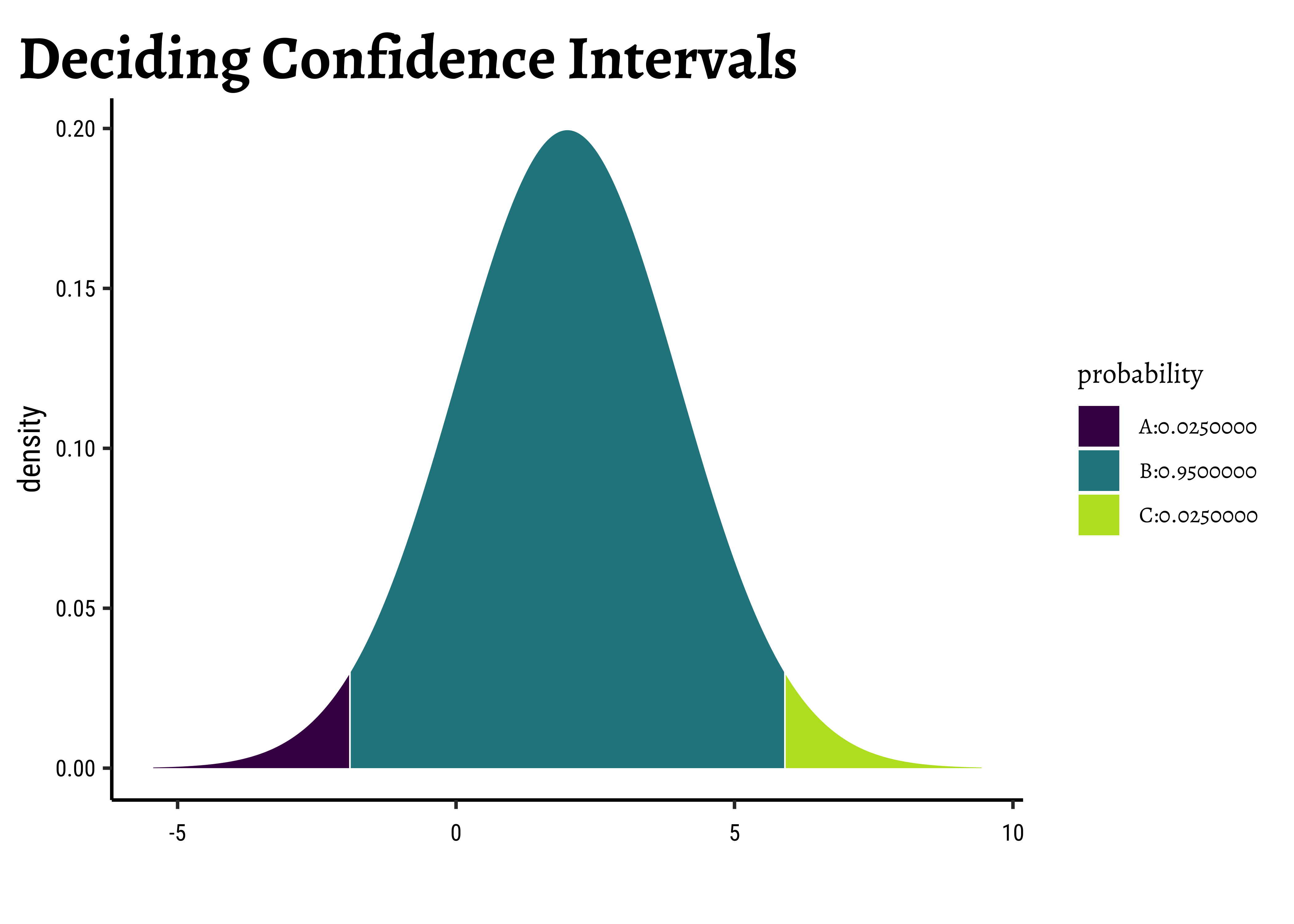

Confidence intervals for population means are given by:

Assuming the y is normally-distributed, the

So estimate is

“Signed Rank” Values: A Small Digression

When the Quant variable we want to test for is not normally distributed, we need to think of other ways to perform our inference. Our assumption about normality has been invalidated.

Most statistical tests use the actual values of the data variables. However, in these cases where assumptions are invalidated, the data are used in rank-transformed sense/order. In some cases the signed-rank of the data values is used instead of the data itself. The signed ranks are then tested to see if there are more of one polarity than the other, roughly speaking, and how probable this could be.

Signed Rank is calculated as follows:

- Take the absolute value of each observation in a sample

- Place the ranks in order of (absolute magnitude). The smallest number has rank = 1 and so on.

- Give each of the ranks the sign of the original observation ( + or -)

Since we are dealing with the mean, the sign of the rank becomes important to use.

A. Model

B. Code

# Standard Wilcoxon Signed_Rank Test

t2 <- wilcox.test(y, # variable name

mu = 0, # belief

alternative = "two.sided",

conf.int = TRUE,

conf.level = 0.95

) %>%

broom::tidy()

t2estimate <dbl> | statistic <dbl> | p.value <dbl> | conf.low <dbl> | conf.high <dbl> | method <chr> | alternative <chr> |

|---|---|---|---|---|---|---|

| 2.04533 | 1144 | 1.036606e-06 | 1.383205 | 2.721736 | Wilcoxon signed rank test with continuity correction | two.sided |

# Can also do this equivalently

# t-test with signed_rank data

t3 <- t.test(signed_rank(y),

mu = 0,

alternative = "two.sided",

conf.int = TRUE,

conf.level = 0.95

) %>%

broom::tidy()

t3estimate <dbl> | statistic <dbl> | p.value <dbl> | parameter <dbl> | conf.low <dbl> | conf.high <dbl> | method <chr> | alternative <chr> |

|---|---|---|---|---|---|---|---|

| 20.26 | 6.700123 | 1.933926e-08 | 49 | 14.1834 | 26.3366 | One Sample t-test | two.sided |

Again, the confidence intervals do not straddle

Note how the Wilcoxon Test reports results about

We saw from the diagram created by Allen Downey that there is only one test 1! We will now use this philosophy to develop a technique that allows us to mechanize several Statistical Models in that way, with nearly identical code.

We can use two packages in R, mosaic to develop our intuition for what are called permutation based statistical tests; and a more recent package called infer in R which can do pretty much all of this, including visualization.

We will stick with mosaic for now. We will do a permutation test first, and then a bootstrap test. In subsequent modules, we will use infer also.

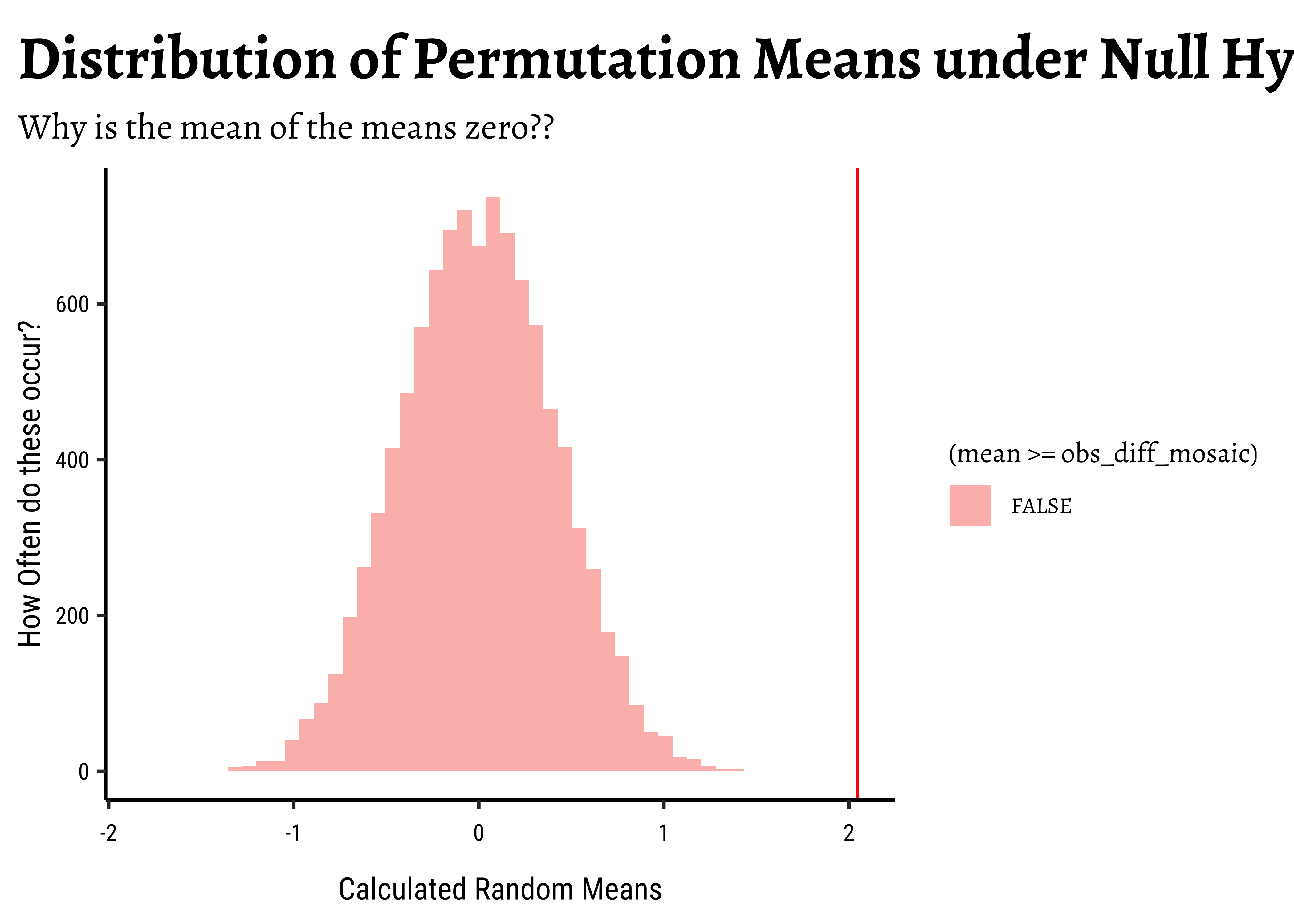

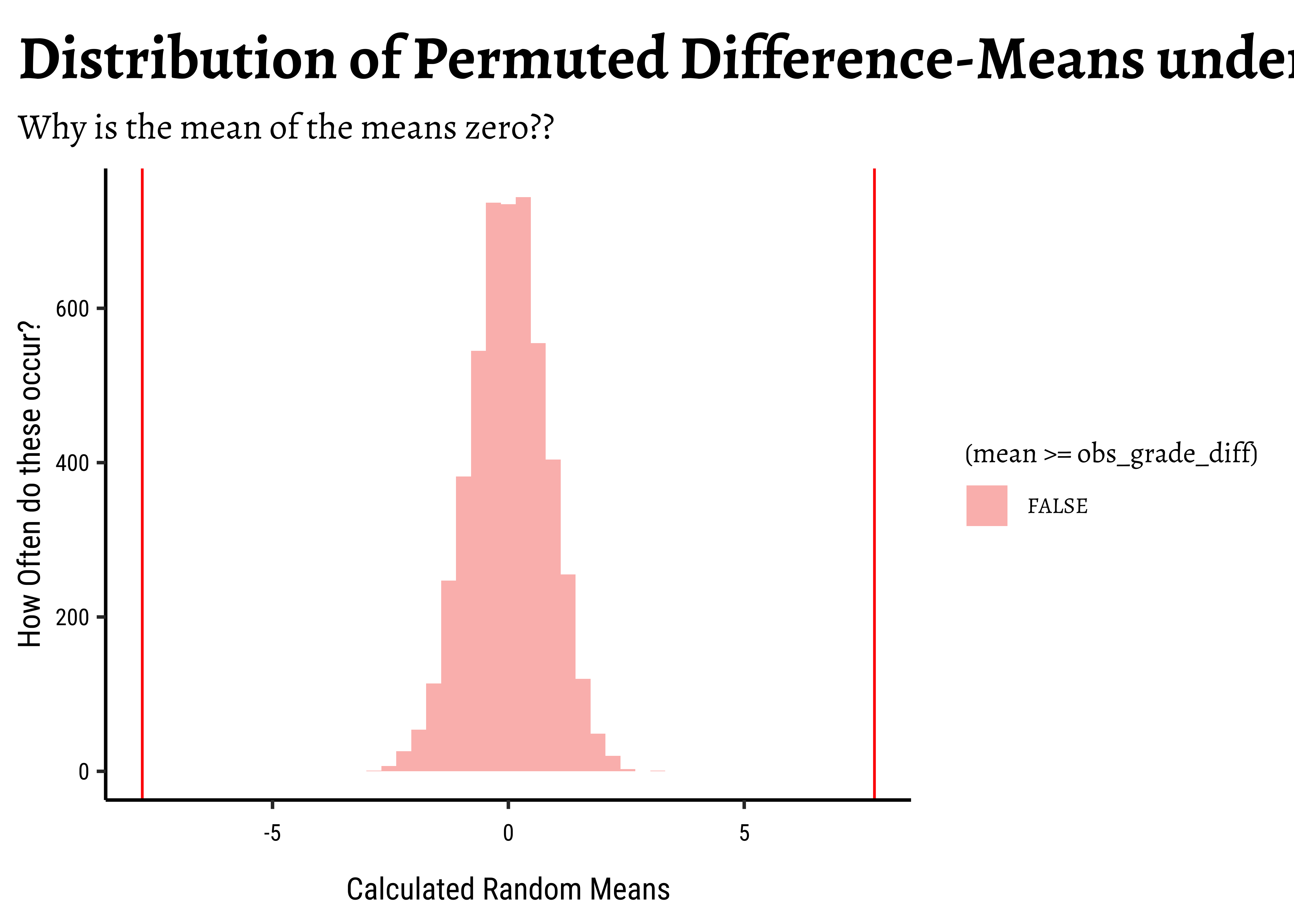

For the Permutation test, we mechanize our belief that

We see that the means here that chances that the randomly generated means can exceed our real-world mean are about

# Set graph theme

theme_set(new = theme_custom())

#

# Calculate exact mean

obs_mean <- mean(~y, data = mydata)

belief1 <- 0 # What we think the mean is

obs_diff_mosaic <- obs_mean - belief1

obs_diff_mosaic[1] 2.045689## Steps in Permutation Test

## Repeatedly Shuffle polarities of data observations

## Take means

## Compare all means with the real-world observed one

null_dist_mosaic <-

mosaic::do(9999) * mean(

~ abs(y) *

sample(c(-1, 1), # +/- 1s multiply y

length(y), # How many +/- 1s?

replace = T

), # select with replacement

data = mydata

)

##

range(null_dist_mosaic$mean)[1] -1.754293 1.473298##

## Plot this NULL distribution

gf_histogram(

~mean,

data = null_dist_mosaic,

fill = ~ (mean >= obs_diff_mosaic),

bins = 50, title = "Distribution of Permutation Means under Null Hypothesis",

subtitle = "Why is the mean of the means zero??"

) %>%

gf_labs(

x = "Calculated Random Means",

y = "How Often do these occur?"

) %>%

gf_vline(xintercept = obs_diff_mosaic, colour = "red")# p-value

# Null distributions are always centered around zero. Why?

prop(~ mean >= obs_diff_mosaic,

data = null_dist_mosaic

)prop_TRUE

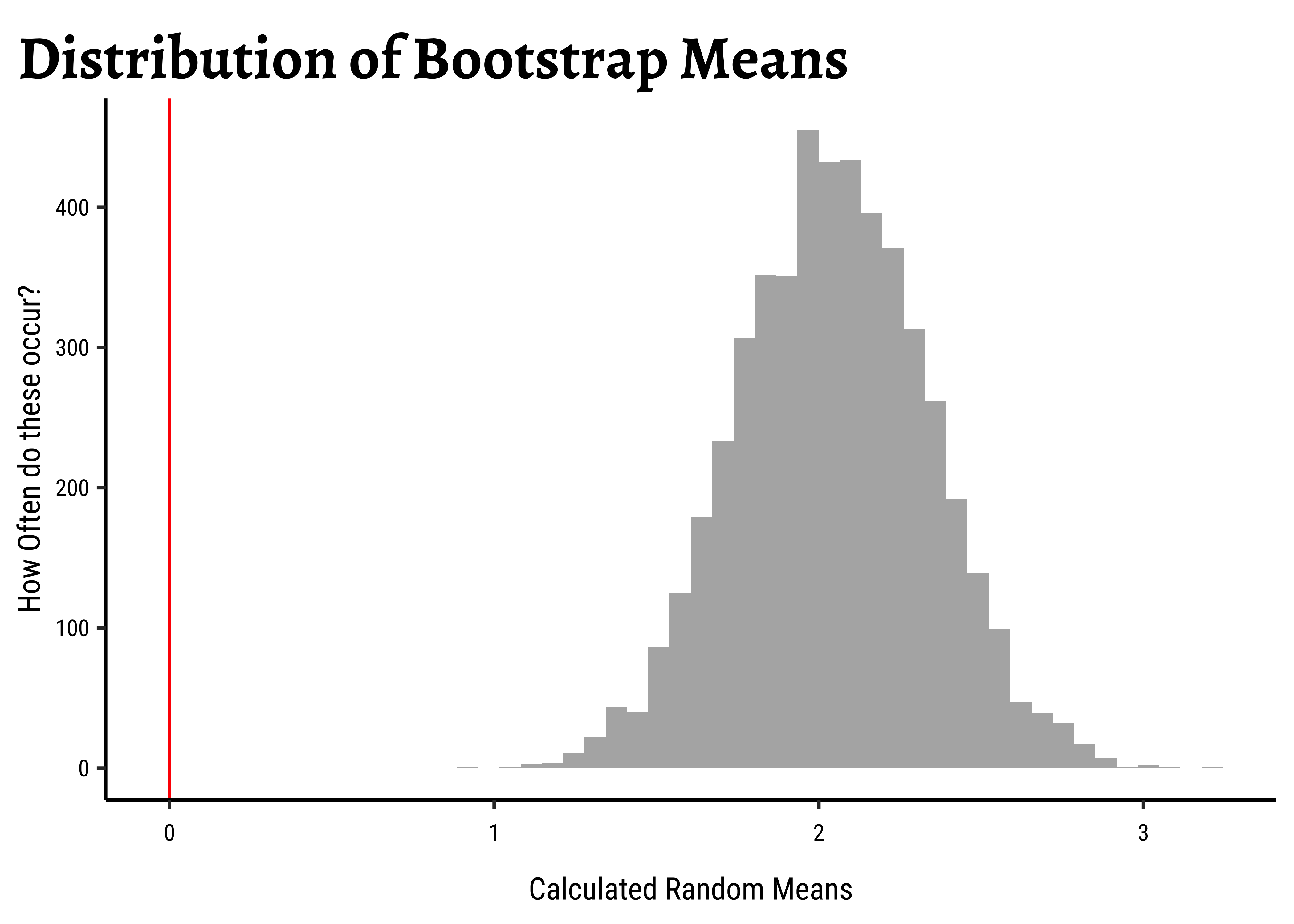

0 Let us try the bootstrap test now: Here we simulate samples, similar to the one at hand, using repeated sampling the sample itself, with replacement, a process known as bootstrapping, or bootstrap sampling.

# Set graph theme

theme_set(new = theme_custom())

##

## Resample with replacement from the one sample of 50

## Calculate the mean each time

null_toy_bs <- mosaic::do(4999) *

mean(

~ sample(y,

replace = T

), # select with replacement

data = mydata

)

## Plot this NULL distribution

gf_histogram(

~mean,

data = null_toy_bs,

bins = 50,

title = "Distribution of Bootstrap Means"

) %>%

gf_labs(

x = "Calculated Random Means",

y = "How Often do these occur?"

) %>%

gf_vline(xintercept = ~belief1, colour = "red")prop_TRUE

1 There is a difference between the two. The bootstrap test uses the sample at hand to generate many similar samples without access to the population, and calculates the statistic needed (i.e. mean). No Hypothesis is stated. The distribution of bootstrap samples looks “similar” to that we might obtain by repeatedly sampling the population itself. (centred around a population parameter, i.e.

The permutation test generates many permutations of the data and generates appropriates measures/statistics under the NULL hypothesis. Which is why the permutation test has a NULL distribution centered at

As student Sneha Manu Jacob remarked in class, Permutation flips the signs of the data values in our sample; Bootstrap flips the number of times each data value is (re)used. Good Insight!!

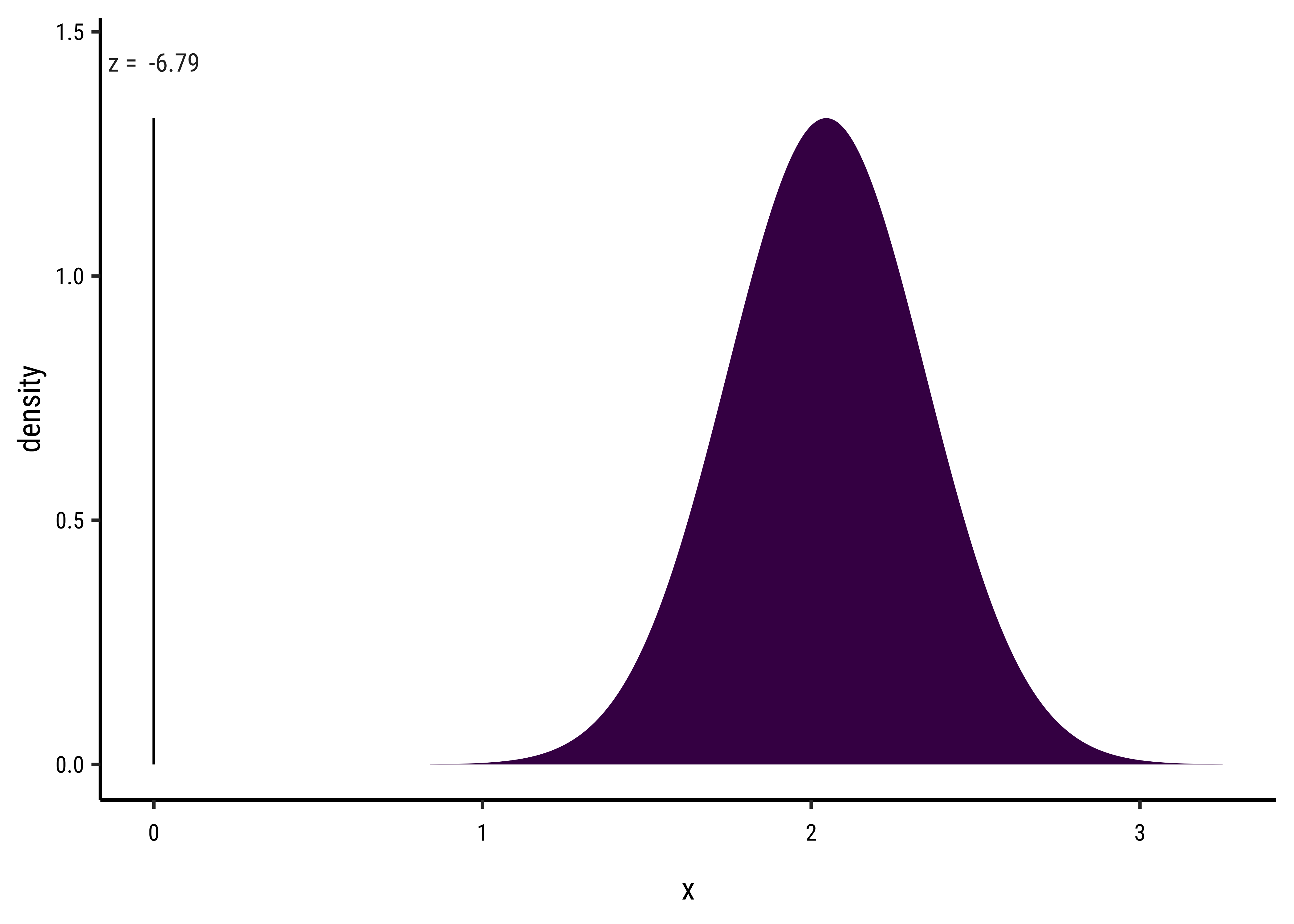

Yes, the t-test works, but what is really happening under the hood of the t-test? The inner mechanism of the t-test can be stated in the following steps:

- Calculate the

meanof the sample - Calculate the

sdof the sample, and, assuming the sample is normally distributed, calculate thestandard error(i.e. - Take the difference between the sample mean

- We expect that the population mean ought to be within the

confidence intervalof the sample mean - For a normally distributed sample, the confidence interval is given by

- Therefore if the difference between actual and believed is far beyond the confidence interval, hmm…we cannot think our belief is correct and we change our opinion.

Let us translate that mouthful into calculations!

mean_belief_pop <- 0.0 # Assert our belief

# Sample Mean

mean_y <- mean(y)

mean_y[1] 2.045689[1] 0.3014752## Confidence Interval of Observed Mean

conf_int <- tibble(ci_low = mean_y - 1.96 * std_error, ci_high = mean_y + 1.96 * std_error)

conf_intci_low <dbl> | ci_high <dbl> | |||

|---|---|---|---|---|

| 1.454798 | 2.63658 |

## Difference between actual and believed mean

mean_diff <- mean_y - mean_belief_pop

mean_diff[1] 2.045689## Test Statistic

t <- mean_diff / std_error

t[1] 6.785596We see that the difference between means is 6.78 times the std_error! At a distance of 1.96 (either way) the probability of this data happening by chance already drops to 5%!! At this distance of 6.78, we would have negligible probability of this data occurring by chance!

How can we visualize this?

If X ~ N(2.046, 0.3015), then P(X <= 1.443e-07) = P(Z <= -6.786) = 5.78e-12 P(X > 1.443e-07) = P(Z > -6.786) = 1

Let us now choose a dataset from the openintro package:

data("exam_grades")

exam_gradessemester <chr> | sex <chr> | exam1 <dbl> | exam2 <dbl> | exam3 <dbl> | course_grade <dbl> |

|---|---|---|---|---|---|

| 2000-1 | Man | 84.5000 | 69.5 | 86.5000 | 76.2564 |

| 2000-1 | Man | 80.0000 | 74.0 | 67.0000 | 75.3882 |

| 2000-1 | Man | 56.0000 | 70.0 | 71.5000 | 67.0564 |

| 2000-1 | Man | 64.0000 | 61.0 | 67.5000 | 63.4538 |

| 2000-1 | Man | 90.5000 | 72.5 | 75.0000 | 72.3949 |

| 2000-1 | Man | 74.0000 | 78.5 | 84.5000 | 71.4128 |

| 2000-1 | Man | 60.5000 | 44.0 | 58.0000 | 56.0949 |

| 2000-1 | Man | 89.0000 | 82.0 | 88.0000 | 78.0103 |

| 2000-1 | Woman | 87.5000 | 86.5 | 95.0000 | 82.9026 |

| 2000-1 | Man | 91.0000 | 98.0 | 88.0000 | 89.0846 |

Research Question

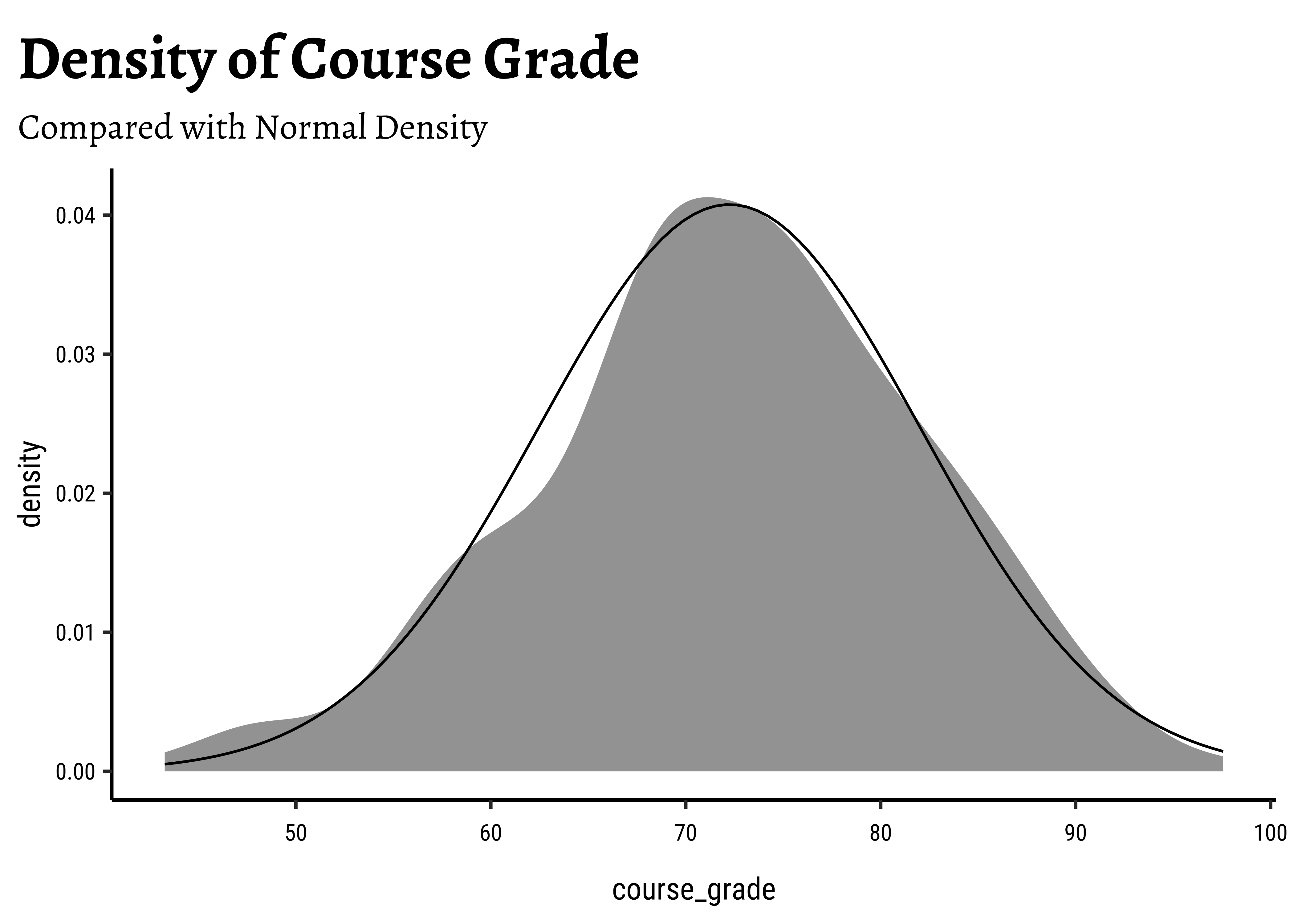

There are quite a few Quant variables in the data. Let us choose course_grade as our variable of interest. What might we wish to find out?

In general, the Teacher in this class is overly generous with grades unlike others we know of, and so the average course-grade is equal to 80% !!

# Set graph theme

theme_set(new = theme_custom())

#

exam_grades %>%

gf_density(~course_grade) %>%

gf_fitdistr(dist = "dnorm") %>%

gf_labs(

title = "Density of Course Grade",

subtitle = "Compared with Normal Density"

)Hmm…data looks normally distributed. But this time we will not merely trust our eyes, but do a test for it.

Testing Assumptions in the Data

stats::shapiro.test(x = exam_grades$course_grade) %>%

broom::tidy()statistic <dbl> | p.value <dbl> | method <chr> | ||

|---|---|---|---|---|

| 0.9939453 | 0.470688 | Shapiro-Wilk normality test |

The Shapiro-Wilkes Test tests whether a data variable is normally distributed or not. Without digging into the maths of it, let us say it makes the assumption that the variable is so distributed and then computes the probability of how likely this is. So a high p-value (

When we have large Quant variables ( i.e. with length >= 5000), the shapiro.test does not work, and we use an Anderson-Darling3 test to confirm normality:

library(nortest)

# Especially when we have >= 5000 observations

nortest::ad.test(x = exam_grades$course_grade) %>%

broom::tidy()statistic <dbl> | p.value <dbl> | method <chr> | ||

|---|---|---|---|---|

| 0.3306555 | 0.5118521 | Anderson-Darling normality test |

So course_grade is a normally-distributed variable. There are no exceptional students! Hmph!

Inference

A. Model

We have that

B. Code

# t-test

t4 <- mosaic::t_test(

exam_grades$course_grade, # Name of variable

mu = 80, # belief

alternative = "two.sided"

) %>% # Check both sides

broom::tidy()

t4estimate <dbl> | statistic <dbl> | p.value <dbl> | parameter <dbl> | conf.low <dbl> | conf.high <dbl> | method <chr> | alternative <chr> |

|---|---|---|---|---|---|---|---|

| 72.23883 | -12.07999 | 2.187064e-26 | 232 | 70.97299 | 73.50467 | One Sample t-test | two.sided |

So, we can reject the NULL Hypothesis that the average grade in the population of students who have taken this class is 80, since there is a minuscule chance that we would see an observed sample mean of 72.238, if the population mean

# t-test

t5 <- wilcox.test(

exam_grades$course_grade, # Name of variable

mu = 90, # belief

alternative = "two.sided",

conf.int = TRUE,

conf.level = 0.95

) %>% # Check both sides

broom::tidy() # Make results presentable, and plottable!!

t5estimate <dbl> | statistic <dbl> | p.value <dbl> | conf.low <dbl> | conf.high <dbl> | method <chr> | alternative <chr> |

|---|---|---|---|---|---|---|

| 72.42511 | 75 | 1.487917e-39 | 71.15002 | 73.71426 | Wilcoxon signed rank test with continuity correction | two.sided |

This test too suggests that the average course grade is different from 80.

Note that we have computed whether the average course_grade is generally different from 80 for this Teacher. We could have computed whether it is greater, or lesser than 80 ( or any other number too). Read this article for why it is better to do a “two.sided” test in most cases.

# Set graph theme

theme_set(new = theme_custom())

#

# Calculate exact mean

obs_mean_grade <- mean(~course_grade, data = exam_grades)

belief <- 80

obs_grade_diff <- belief - obs_mean_grade

## Steps in a Permutation Test

## Repeatedly Shuffle polarities of data observations

## Take means

## Compare all means with the real-world observed one

null_dist_grade <-

mosaic::do(4999) *

mean(

~ (course_grade - belief) *

sample(c(-1, 1), # +/- 1s multiply y

length(course_grade), # How many +/- 1s?

replace = T

), # select with replacement

data = exam_grades

)

## Plot this NULL distribution

gf_histogram(

~mean,

data = null_dist_grade,

fill = ~ (mean >= obs_grade_diff),

bins = 50,

title = "Distribution of Permuted Difference-Means under Null Hypothesis",

subtitle = "Why is the mean of the means zero??"

) %>%

gf_labs(

x = "Calculated Random Means",

y = "How Often do these occur?"

) %>%

gf_vline(xintercept = obs_grade_diff, colour = "red") %>%

gf_vline(xintercept = -obs_grade_diff, colour = "red")# p-value

# Permutation distributions are always centered around zero. Why?

prop(~ mean >= obs_grade_diff,

data = null_dist_grade

) +

prop(~ mean <= -obs_grade_diff,

data = null_dist_grade

)prop_TRUE

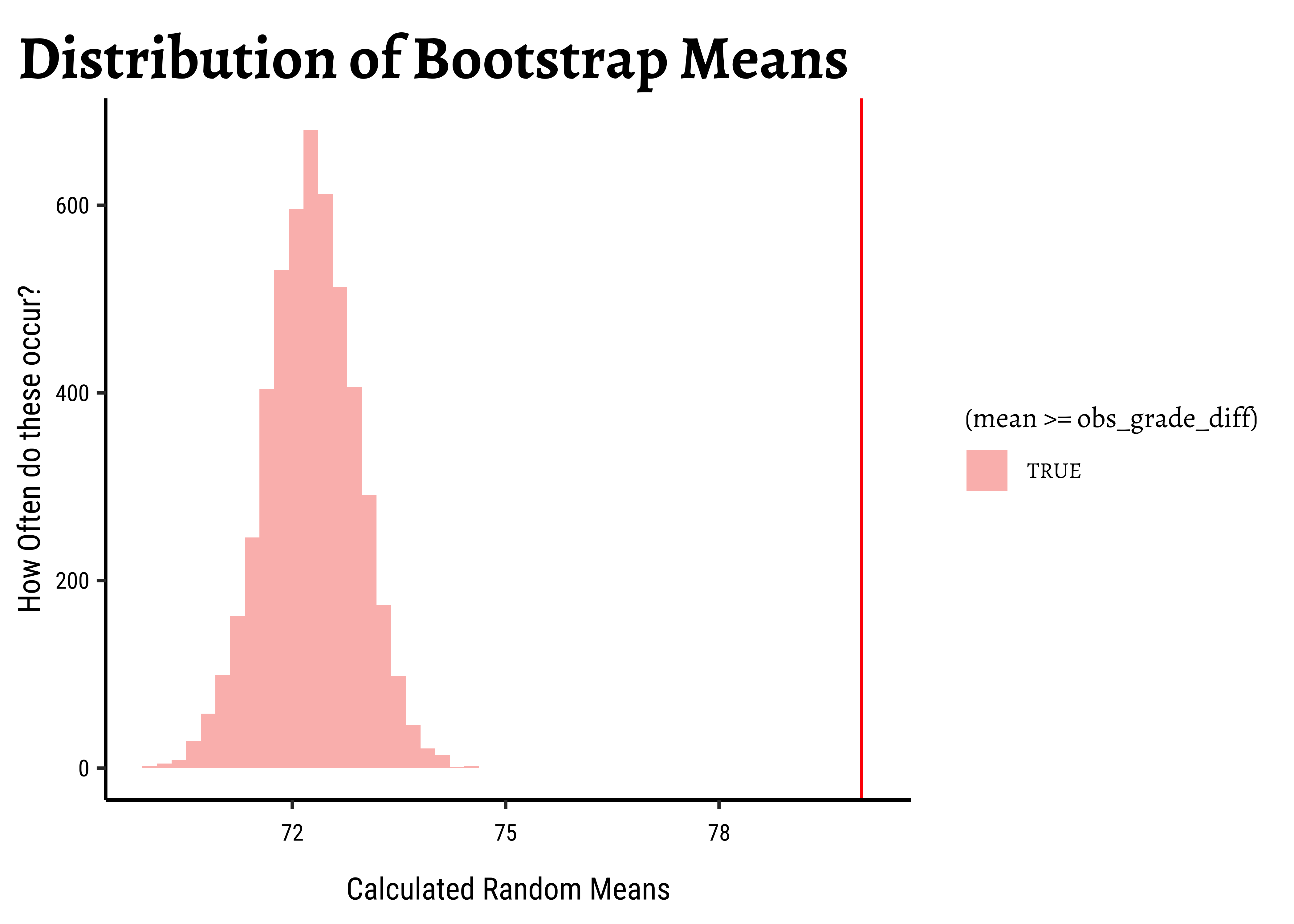

0 And let us now do the bootstrap test:

null_grade_bs <- mosaic::do(4999) *

mean(

~ sample(course_grade,

replace = T

), # select with replacement

data = exam_grades

)

## Plot this NULL distribution

gf_histogram(

~mean,

data = null_grade_bs,

fill = ~ (mean >= obs_grade_diff),

bins = 50,

title = "Distribution of Bootstrap Means"

) %>%

gf_labs(

x = "Calculated Random Means",

y = "How Often do these occur?"

) %>%

gf_vline(xintercept = ~belief, colour = "red")prop_TRUE

0 The permutation test shows that we are not able to “generate” the believed mean-difference with any of the permutations. Likewise with the bootstrap, we are not able to hit the believed mean with any of the bootstrap samples.

Hence there is no reason to believe that the belief (80) might be a reasonable one and we reject our NULL Hypothesis that the mean is equal to 80.

A series of tests deal with one mean value of a sample. The idea is to evaluate whether that mean is representative of the mean of the underlying population. Depending upon the nature of the (single) variable, the test that can be used are as follows:

- We can only sample from a population, and calculate sample statistics

- But we still want to know about population parameters

- All our tests and measures of uncertainty with samples are aimed at obtaining a confident measure of a population parameter.

- Means are the first on the list!

- If samples are normally distributed, we use a t.test.

- Else we try non-parametric tests such as the Wilcoxon test.

- Since we now have compute power at our fingertips, we can leave off considerations of normality and simply proceed with either a permutation or a boostrap test.

- OpenIntro Modern Statistics, Chapter #17

- Bootstrap based Inference using the

inferpackage: https://infer.netlify.app/articles/t_test - Michael Clark & Seth Berry. Models Demystified: A Practical Guide from t-tests to Deep Learning. https://m-clark.github.io/book-of-models/

- University of Warwickshire. SAMPLING: Searching for the Approximation Method use to Perform rational inference by Individuals and Groups. https://sampling.warwick.ac.uk/#Overview

Footnotes

Citation

@online{v.2022,

author = {V., Arvind},

title = {🃏 {Inference} for a {Single} {Mean}},

date = {2022-11-10},

url = {https://av-quarto.netlify.app/content/courses/Analytics/Inference/Modules/100-OneMean/},

langid = {en},

abstract = {Inference Tests for a Single population Mean}

}